In my previous post, I said I was going to learn about AI, and I've been making progress.

Since "learning AI" is as generic as saying "learning Maths" or "learning medicine", I narrowed my focus to one of the pillars of machine learning: Neural Networks and Deep Learning.

Neural Networks can solve a particular set of problems that are very hard to tackle with a classical, rule-based approach to programming.

Can you think of a way to code an algorithm to identify cats in images?

There are some ways, but they are very hard to develop and perform poorly (a high error rate).

For the cat/non-cat problem, a neural network (NN) is by far the better approach; a Convolutional Neural Network, to be specific.

It wasn't my intention to design neural networks, but I wanted a good grasp of how they work under the hood. That knowledge will be essential to understand other topics in machine learning, including how LLMs work.

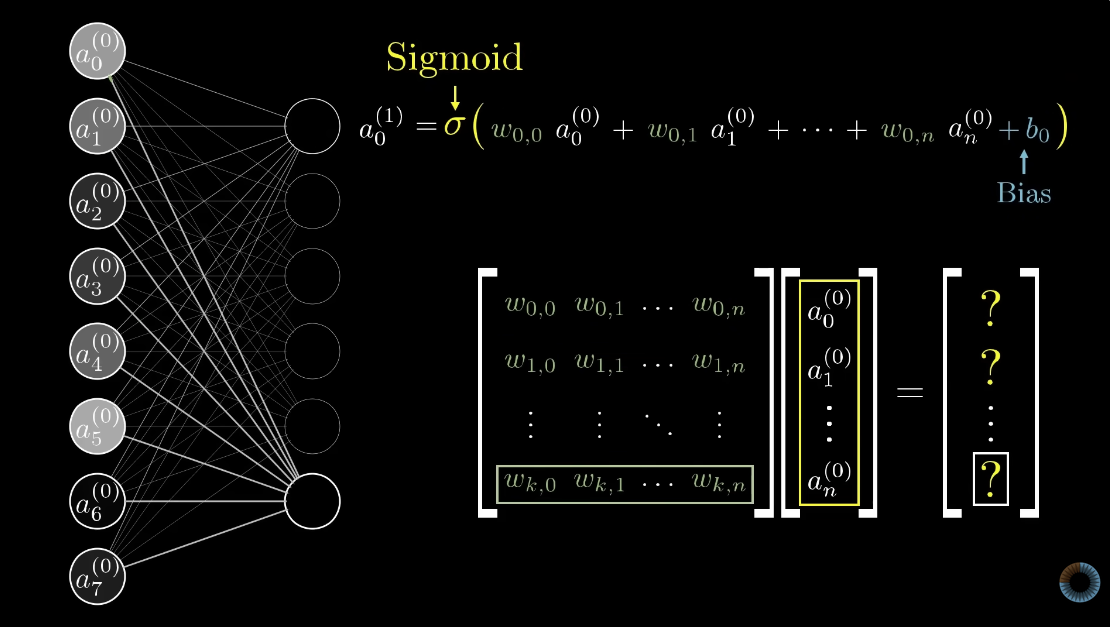

To gain a first intuition about NNs, I watched (multiple times) the YouTube series *Neural networks* by 3Blue1Brown.

I was blown away by the clean design of the videos and animations, and by the clarity of the explanations and the narrative.

Grant Sanderson, the channel's author, should win an Oscar in the video tutorial category! Unfortunately, no such category exists.

Grant is a mathematician who worked at Khan Academy as a content creator, where he developed tools for creating math visualizations.

His goal is to make complex topics easier to grasp by leveraging visual intuition. I watched the first three videos of the *Neural networks* series several times.

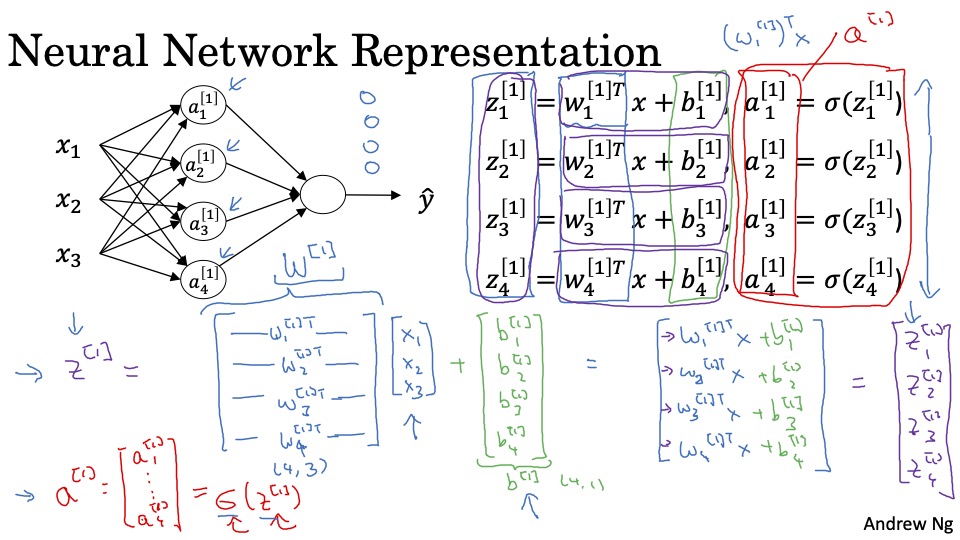

Then I enrolled in one of the classic NN courses, *Neural Networks and Deep Learning* by Andrew Ng.

Andrew is a popular ML expert and online teacher who is making machine learning accessible through his courses.

The course is split into 4 weeks, but it took me more than 8 weeks to finish it!

Andrew's course focuses on equations, mathematical notation and implementation details.

After the first week, I realised I needed to review the basics of algebra and calculus.

So I watched various videos from *The essence of calculus*, another series by the brilliant 3Blue1Brown.

To review linear algebra, I watched some of the Khan Academy videos on the topic, in particular the one about matrix-vector multiplication.

The gradient of a function (the vector of its partial derivatives) is used in the training phase of the NN, during backpropagation. The gradients of the loss with respect to the weights and biases tell us how to adjust those weights and biases to reduce the error.

It is not essential to know how to calculate derivatives, but you should at least know what the gradient of a function is and why they are essential.
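To make "gradient" concrete, here is a minimal sketch that is not taken from the course: gradient descent on a made-up toy function f(w, b) = (w - 3)^2 + (b + 1)^2, whose minimum is at w = 3, b = -1. The function, the learning rate and the number of steps are my own assumptions; the point is only that repeatedly stepping against the gradient moves the parameters towards the minimum.

```python
import numpy as np

def f(params):
    """Toy 'loss' (an assumption for illustration) with its minimum at w = 3, b = -1."""
    w, b = params
    return (w - 3.0) ** 2 + (b + 1.0) ** 2

def grad_f(params):
    """Gradient of f: the vector of partial derivatives [df/dw, df/db]."""
    w, b = params
    return np.array([2.0 * (w - 3.0), 2.0 * (b + 1.0)])

params = np.array([0.0, 0.0])  # initial guess for (w, b)
learning_rate = 0.1

for step in range(100):
    # Move a small step in the direction opposite to the gradient
    params = params - learning_rate * grad_f(params)

print(params)     # very close to [3, -1]
print(f(params))  # very close to 0
```

In a real network the "function" is the loss, and the parameters are all the weights and biases, but the update rule has the same shape.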

NNs are represented using matrices and vectors, so it's fundamental to be comfortable with basic matrix and vector operations.
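As an illustration of why matrix-vector multiplication matters, the sketch below computes the weighted sums of one layer for a single input. The layer sizes (3 inputs, 4 neurons) and the random weights are arbitrary assumptions; the code checks that one matrix-vector product gives the same result as computing each neuron's weighted sum separately.

```python
import numpy as np

rng = np.random.default_rng(0)

n_x, n_h = 3, 4                      # 3 input features, 4 neurons in the layer (assumed sizes)
x = rng.standard_normal((n_x, 1))    # one input example as a column vector
W = rng.standard_normal((n_h, n_x))  # one row of weights per neuron
b = np.zeros((n_h, 1))               # one bias per neuron

# The whole layer is a single matrix-vector product plus a bias vector
z = W @ x + b                        # shape (4, 1)

# Same computation, one neuron at a time: weighted sum of the inputs plus bias
z_loop = np.array([[sum(W[i, j] * x[j, 0] for j in range(n_x)) + b[i, 0]]
                   for i in range(n_h)])

print(z.shape)                 # (4, 1)
print(np.allclose(z, z_loop))  # True
```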

The first week of Andrew's course was easy to follow, and I was confident I would finish it in 4 or maybe 5 weeks.

But after the first week, when he introduced vectorisation, the maths became more abstract, so I had to remind myself what all those letters (X, W, b, G, Z, ...) and indices in brackets meant.
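Here is a minimal sketch of what vectorisation means, assuming the course's convention that each column of X is one training example (the sizes and random values are my own choices). A loop over the m examples and a single matrix product produce the same Z; in the vectorised version, NumPy broadcasting adds the bias column b to every column.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(1)
n_x, n_h, m = 3, 4, 5               # input features, neurons, training examples (assumed)
X = rng.standard_normal((n_x, m))   # each column X[:, i] is one example
W = rng.standard_normal((n_h, n_x))
b = rng.standard_normal((n_h, 1))

# Loop version: process one example (column) at a time
Z_loop = np.zeros((n_h, m))
for i in range(m):
    Z_loop[:, [i]] = W @ X[:, [i]] + b

# Vectorised version: all m examples at once; b is broadcast across the columns
Z = W @ X + b
A = sigmoid(Z)                      # activation applied element-wise

print(Z.shape, A.shape)        # (4, 5) (4, 5)
print(np.allclose(Z, Z_loop))  # True
```

The vectorised form is not just shorter: NumPy runs the matrix product in optimised native code, which is much faster than a Python loop when m is large.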

Fortunately, ChatGPT clarified the concepts given just a bit of context and a screenshot from the video course. Andrew's NN course is well known, and its notation is used in many online articles, so LLMs have absorbed that knowledge.

I finished the first course a week ago, and I gained a good understanding of:

- what a neuron does and what layers are

- what weights and biases are

- activation functions

- forward propagation

- backward propagation and gradient descent
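The pieces in this list fit together in a surprisingly small amount of code. Below is a minimal sketch of a single sigmoid neuron (logistic regression) trained with forward propagation, backward propagation and gradient descent, assuming the course's conventions: one example per column of X, a sigmoid activation and a cross-entropy cost. The toy dataset, the learning rate, the number of iterations and the helper names `forward`, `backward` and `cost` are my own hypothetical choices, not code from the course.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(w, b, X):
    """Forward propagation: linear step z = w^T x + b, then sigmoid activation."""
    Z = w.T @ X + b                 # shape (1, m)
    return sigmoid(Z)

def cost(A, Y):
    """Cross-entropy cost averaged over the m examples."""
    m = Y.shape[1]
    A = np.clip(A, 1e-12, 1 - 1e-12)  # avoid log(0)
    return -np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A)) / m

def backward(A, X, Y):
    """Backward propagation: gradients of the cost w.r.t. w and b."""
    m = X.shape[1]
    dZ = A - Y                      # shape (1, m)
    dw = (X @ dZ.T) / m             # shape (n_x, 1)
    db = np.sum(dZ) / m             # scalar
    return dw, db

# Made-up toy data: the label is 1 when x1 + x2 > 0 (linearly separable)
rng = np.random.default_rng(42)
m = 200
X = rng.standard_normal((2, m))
Y = (X[0:1, :] + X[1:2, :] > 0).astype(float)  # shape (1, m)

w = np.zeros((2, 1))  # weights
b = 0.0               # bias
learning_rate = 0.5

initial_cost = cost(forward(w, b, X), Y)
for i in range(1000):
    A = forward(w, b, X)
    dw, db = backward(A, X, Y)
    w -= learning_rate * dw         # gradient descent update
    b -= learning_rate * db

A = forward(w, b, X)
final_cost = cost(A, Y)
accuracy = np.mean((A > 0.5) == Y)
print(initial_cost, final_cost, accuracy)
```

With all weights at zero, every prediction is 0.5, so the initial cost is ln 2 (about 0.693); training should push the cost down and the accuracy close to 1 on this easy dataset. A deeper network repeats the same forward/backward steps layer by layer.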

I also gained some practical skills in working with vectors and matrices using NumPy.

Next step

I am continuing my ML learning journey.

I found that MIT (Massachusetts Institute of Technology) has released the course 6.S191: Introduction to Deep Learning on YouTube, so I'll watch some of the videos to see if I can follow along.